AI Companion / Generating Systems Models

Crafting Engineering Strategy has a chapter on systems modeling along with a number of examples. Those examples focus on using the lethain/systems Python library to generate models in a Jupyter notebook, with examples in lethain/eng-strategy-models. That is a reasonable approach, but it also requires learning the systems library’s syntax for modeling.

This chapter looks at how to use an LLM to write the systems model syntax for you, without needing to learn that syntax in great detail. In addition to being specific instructions for working with the systems library, this is also a generalizable pattern for using LLMs to work with domain-specific languages.

We’ll cover:

- Adding problem-specific resources to your context window to prime LLMs to solve complex problems

- Using an LLM to write a systems model in a proper systems specification

- Having an LLM walk you through running a script to run a systems model

- Using a Model Context Protocol (MCP) server to simplify the process of running a systems model, and a Claude Artifact to explore the results

The following instructions specifically build on the Anthropic Claude.ai Project setup, but should be adaptable to other approaches, especially once support for local MCP servers is more widely adopted in environments other than Claude Desktop.

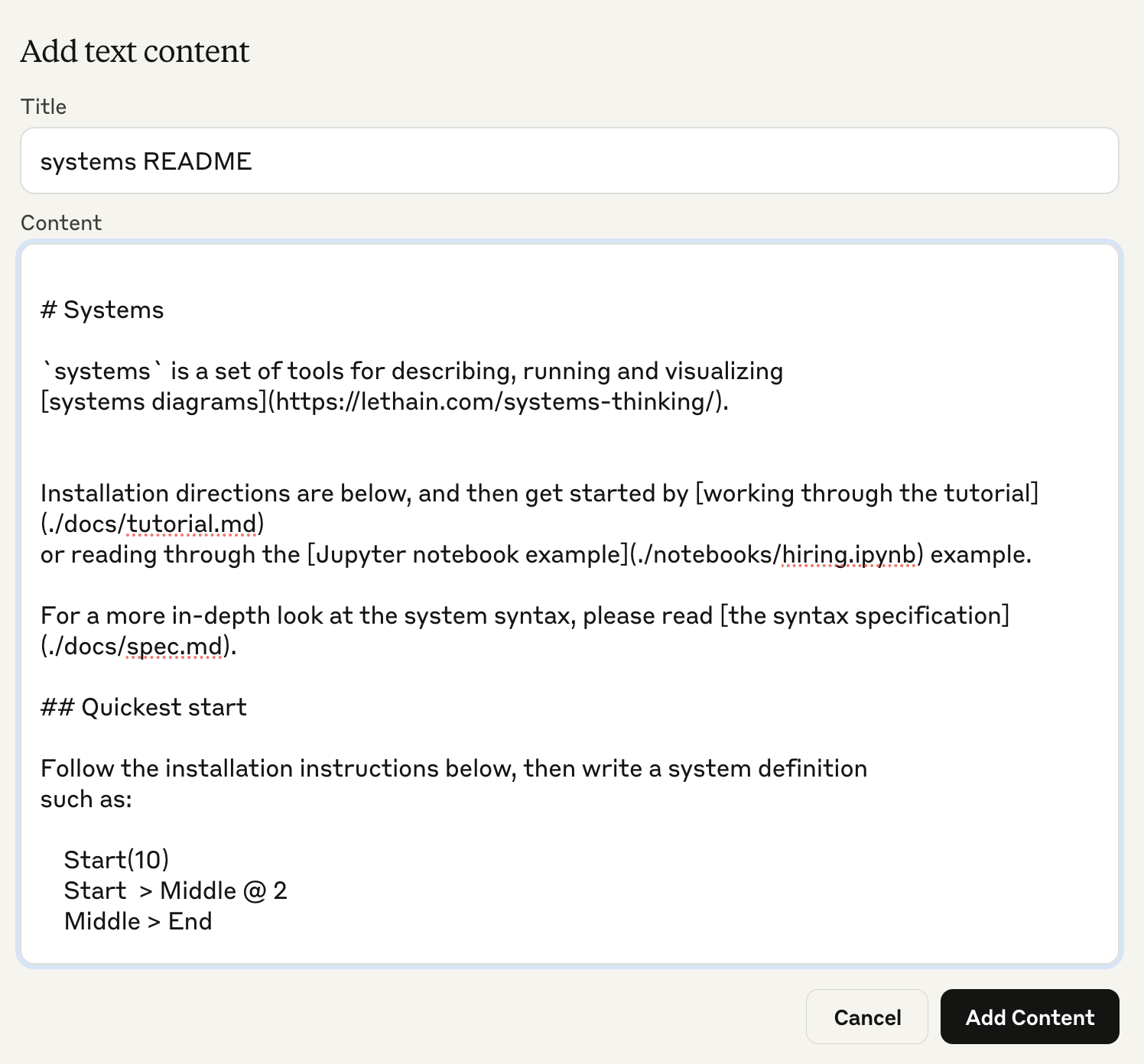

Adding systems modeling instructions

In the first chapter, Foundation of Collaboration, we configured a project to include Crafting Engineering Strategy in the context window. We’re going to continue that technique, adding more detailed instructions for creating systems models into our context window.

Start by retrieving a text copy of the README.md from lethain/systems. That file explains the syntax and usage of the library in detail, and ought to be enough instruction for the LLM to write model specifications. Once you have the file, upload it into your Anthropic project alongside your LLM-optimized version of Crafting Engineering Strategy.

Adding README.md to project's input context

This will make the README’s contents available in the context window, serving as in-context learning (ICL) examples for generating models.

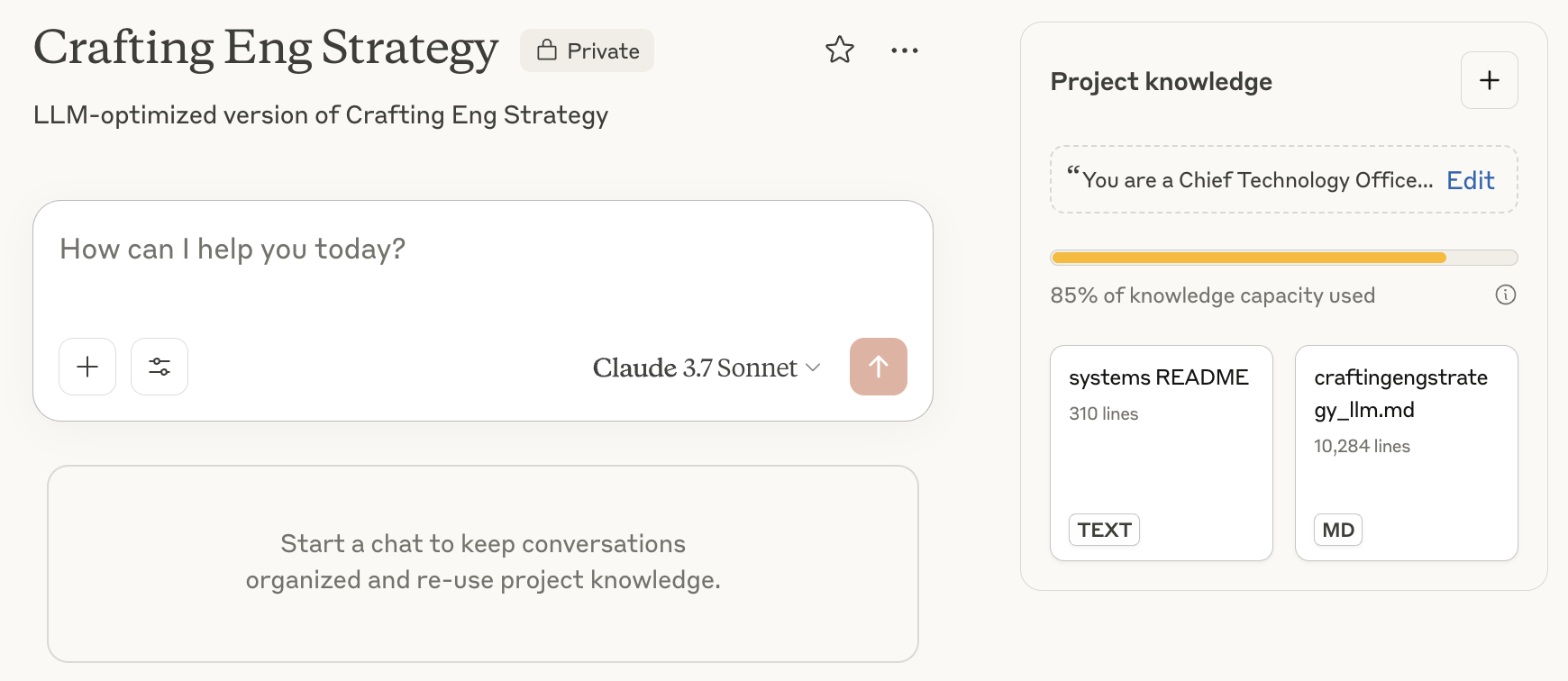

Project overview with both book and README contents

After adding the README, your project will include both its contents and also the book’s chapters.

Now it’s time to start writing a systems model. The model we’ll write is similar to the hiring model specified in Modeling a hiring funnel with Systems library, but that model is not included in Crafting Engineering Strategy or the README.md.

Creating a model

Start by writing a general description of the system you want to create. The prompt I started with is:

Help me write a systems model specification for systems library to model a hiring pipeline system where:

1. First stock is infinite stock of "potential candidates"

2. Second stock is "outreaches", with an in flow of 10 from "potential candidates"

3. Third stock is "interested", with an in flow of 50% from "outreaches"

4. Fourth stock is "active", with an in flow of 50% from "interested"

5. Fifth stock is "offers" with an in flow of 10% from "active"

6. Sixth and final stock is "hires" with an in flow of 70% from "offers"

All of these flows with percentages should be modeled using "Leak" flows, for example:

Outreaches > Interested @ Leak(0.5)

This is a detailed description, although only the last few sentences about Leaks require specific knowledge of the systems syntax. We’ll come back to the “Leak” versus “Conversion” distinction a bit later. The biggest takeaway is that, even if you don’t need to know the systems syntax, you absolutely do still need to understand how systems models work to write this specification.

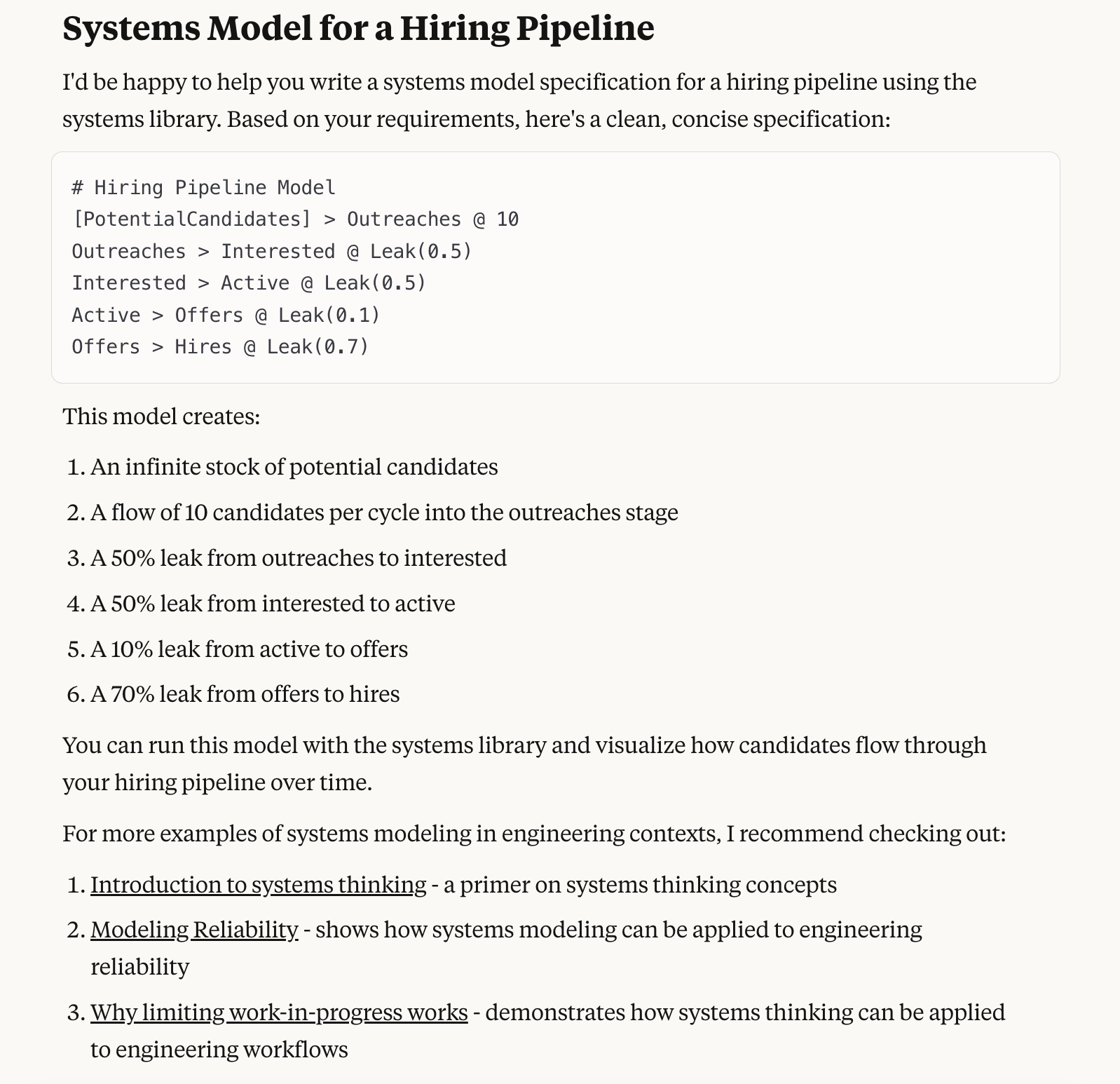

From that prompt, Claude generates a complete, usable model, as well as links to a handful of examples of other systems models. If you run into a problem, those examples might be helpful to learn from, but you could also just convert them into Markdown and include them in your prompt to help the LLM fix any errors it generated.

Claude.ai generates working systems syntax from natural language prompt
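To make the target concrete, here is a minimal sketch of the kind of specification the prompt above asks for, assembled by a small Python helper. The helper name `build_funnel_spec` and the CamelCase stock names are hypothetical, and the bracketed `[PotentialCandidates]` form for an infinite stock is an assumption based on the systems README rather than Claude’s actual output. Only the `Outreaches > Interested @ Leak(0.5)` line format is taken directly from the prompt.

```python
def build_funnel_spec(source, first_stock, inflow, steps, flow="Leak"):
    """Assemble specification text for a linear funnel.

    source:      name of the infinite stock (assumed to be written in brackets)
    first_stock: first finite stock, fed at a fixed rate of `inflow` per round
    steps:       ordered (stock_name, rate) pairs for the remaining stocks
    flow:        flow type applied to every percentage-based step
    """
    lines = [f"[{source}] > {first_stock} @ {inflow}"]
    previous = first_stock
    for stock, rate in steps:
        lines.append(f"{previous} > {stock} @ {flow}({rate})")
        previous = stock
    return "\n".join(lines)


# Stock names and rates mirror the natural-language prompt above.
spec = build_funnel_spec(
    "PotentialCandidates",
    "Outreaches",
    10,
    [("Interested", 0.5), ("Active", 0.5), ("Offers", 0.1), ("Hires", 0.7)],
)
print(spec)
```

Generating the text from a list of stages is only a convenience for illustration. In practice the LLM writes these lines directly from the prompt.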

Now that we have a working prompt, you may have recognized that the prior hint to use Leak doesn’t really make that much sense. Leaks do model a portion of candidates progressing forward in the hiring pipeline, but unfortunately they also model the candidates who don’t progress as staying in the prior stock. That isn’t quite right: candidates who don’t move forward in a hiring funnel don’t stay in the prior stock, they leave the hiring funnel. For example, a candidate who doesn’t get an offer doesn’t stay in Active, they are removed from the hiring process entirely.
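The difference is easier to see with numbers. The following is a simplified sketch of one round of each flow type as described above. It is not the systems library’s implementation: the function names are hypothetical, and rounding down to whole candidates is an assumption made for illustration.

```python
import math


def apply_leak(source, dest, rate):
    """Move a fraction of the source forward; everyone else stays in the source."""
    moved = math.floor(source * rate)
    return source - moved, dest + moved


def apply_conversion(source, dest, rate):
    """Move a fraction of the source forward; everyone else leaves the system."""
    moved = math.floor(source * rate)
    return 0, dest + moved


# Ten candidates in the prior stock, with a 50% rate into the next stock.
print(apply_leak(10, 0, 0.5))        # (5, 5): five candidates remain in the prior stock
print(apply_conversion(10, 0, 0.5))  # (0, 5): the other five are gone from the funnel
```

With a Leak, the five candidates who did not progress are still sitting in the prior stock next round, which is the behavior that does not match a real hiring funnel.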

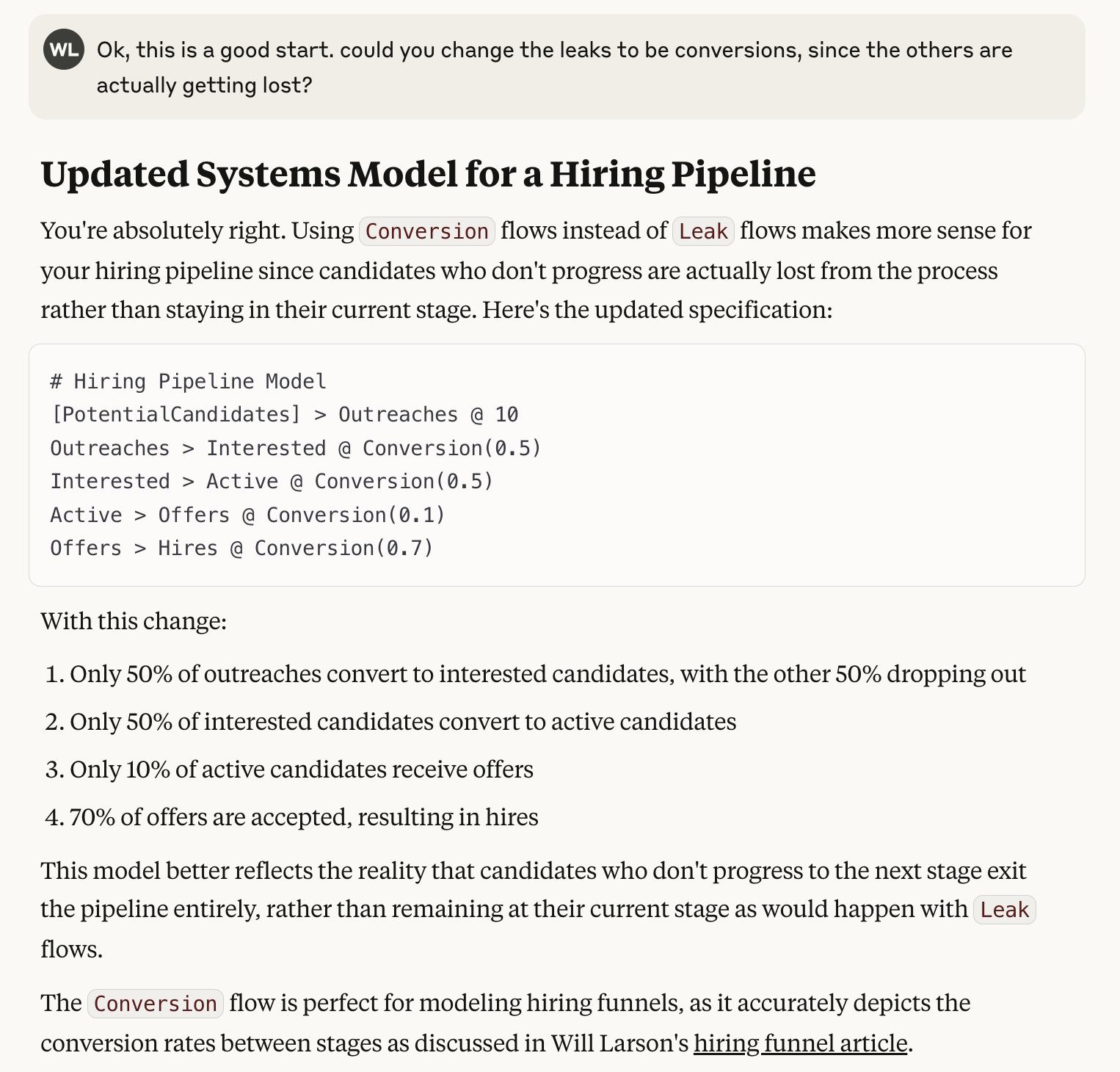

Let’s fix this by asking the LLM to use a Conversion instead of a Leak, using a prompt such as:

Ok, this is a good start. Could you change the leaks to be conversions, since the others are actually getting lost?

We can see the output of that prompt showing a fixed model.

Claude.ai switching from Leaks to Conversions

As we’ve shown in this section, if you include enough appropriate documentation, LLMs are surprisingly good at writing valid syntax. The challenge is understanding your goals well enough to provide that documentation.

Running the model

Once you have working syntax for a systems model, the next step is getting data out of that model. While I personally run models in a Jupyter Notebook, as demonstrated in lethain/eng-strategy-models, you can also rely on the LLM to walk you through running the models locally. Continuing the above conversation, let’s ask for instructions to run our new model:

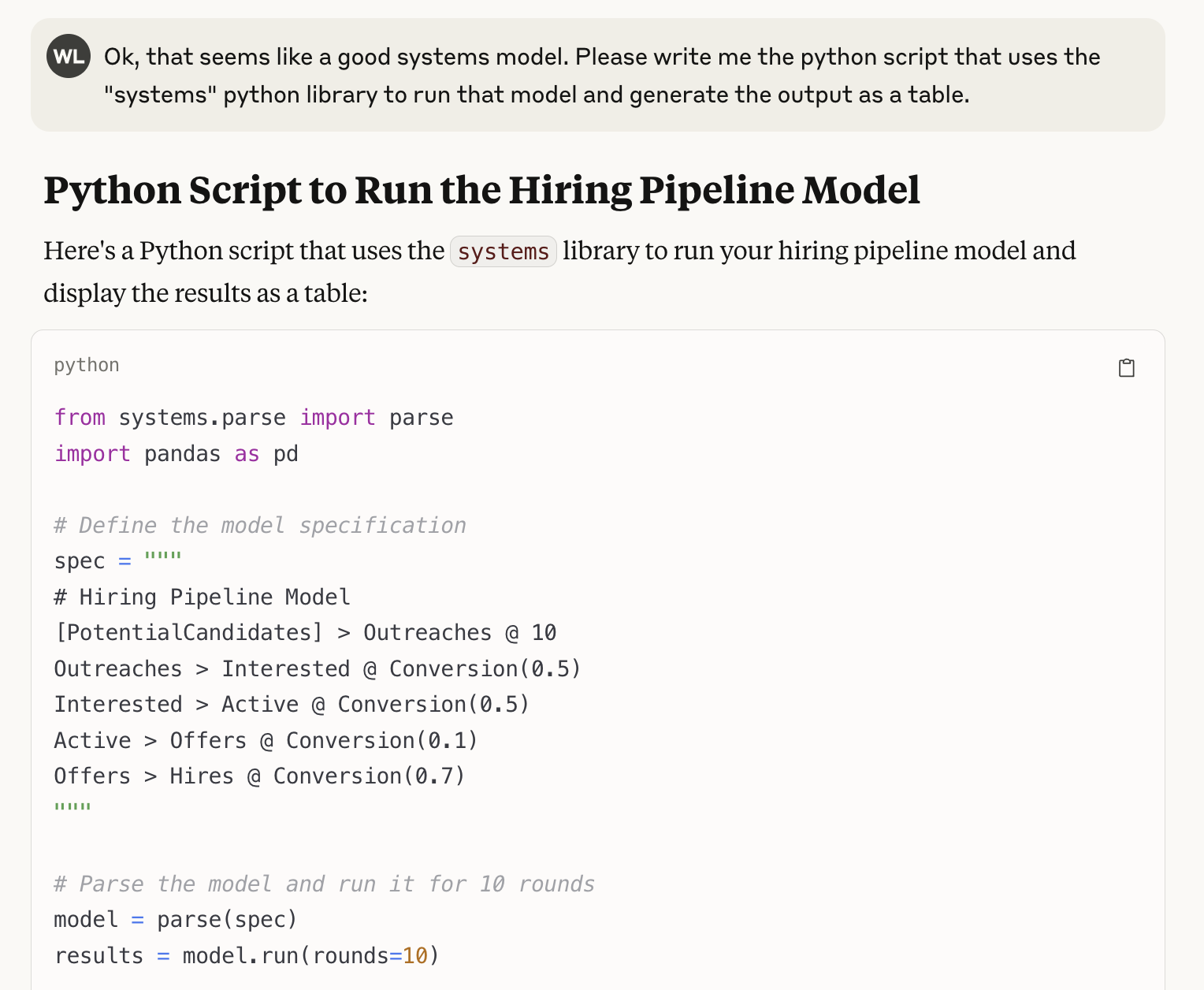

Ok, that seems like a good systems model. Please write me the python script that uses the "systems" python library to run that model and generate the output as a table.

This generates instructions for installing systems locally, along with a script to run the model. The full script is available in this Gist.
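The table-rendering half of such a script can be sketched without the library at all. This sketch assumes the results arrive as a list of per-round dictionaries mapping stock names to values. That shape, the `format_table` helper, and the sample rows are all assumptions for illustration; they are not the Gist’s code or real library output.

```python
def format_table(rows, columns):
    """Render per-round values as a right-aligned plain-text table."""
    header = ["Round"] + list(columns)
    body = [[str(i)] + [str(row[c]) for c in columns] for i, row in enumerate(rows)]
    # Each column is as wide as its longest cell, including the header.
    widths = [max(len(line[j]) for line in [header] + body) for j in range(len(header))]

    def fmt(cells):
        return "  ".join(cell.rjust(width) for cell, width in zip(cells, widths))

    return "\n".join([fmt(header)] + [fmt(line) for line in body])


# Placeholder rows for illustration only, not output from a real model run.
rows = [
    {"Outreaches": 0, "Interested": 0},
    {"Outreaches": 10, "Interested": 0},
    {"Outreaches": 10, "Interested": 5},
]
table = format_table(rows, ["Outreaches", "Interested"])
print(table)
```

A plain-text table like this is convenient precisely because it can be pasted straight back into the chat.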

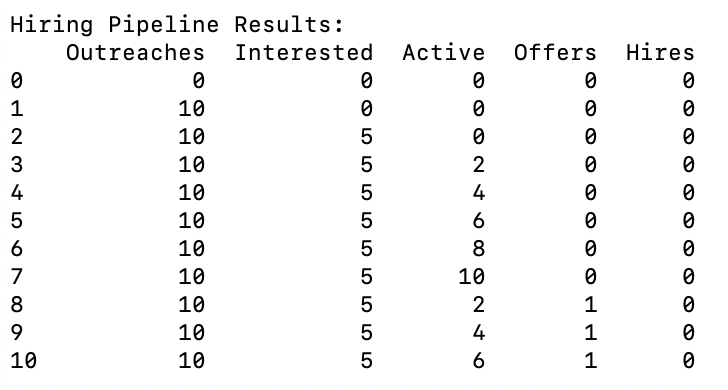

Textual table of output from a systems model

If you follow the steps to install and run the script, you’ll get this table as output.

Textual table showing output from systems model

You can then include that table, along with a prompt asking Claude.ai to create an artifact to explore the data:

This is the library output, please produce an artifact that shows chart of active candidates over time:

{table data omitted for brevity}

This will then generate this Artifact visualizing the dataset as a chart.

Claude.ai Artifact showing a chart of system model output

This approach works, but it’s rather awkward, requiring moving between the chat interface and the terminal. What we really want is an approach that’s better than a Jupyter notebook, and this is decidedly worse. Fortunately, we can do better.

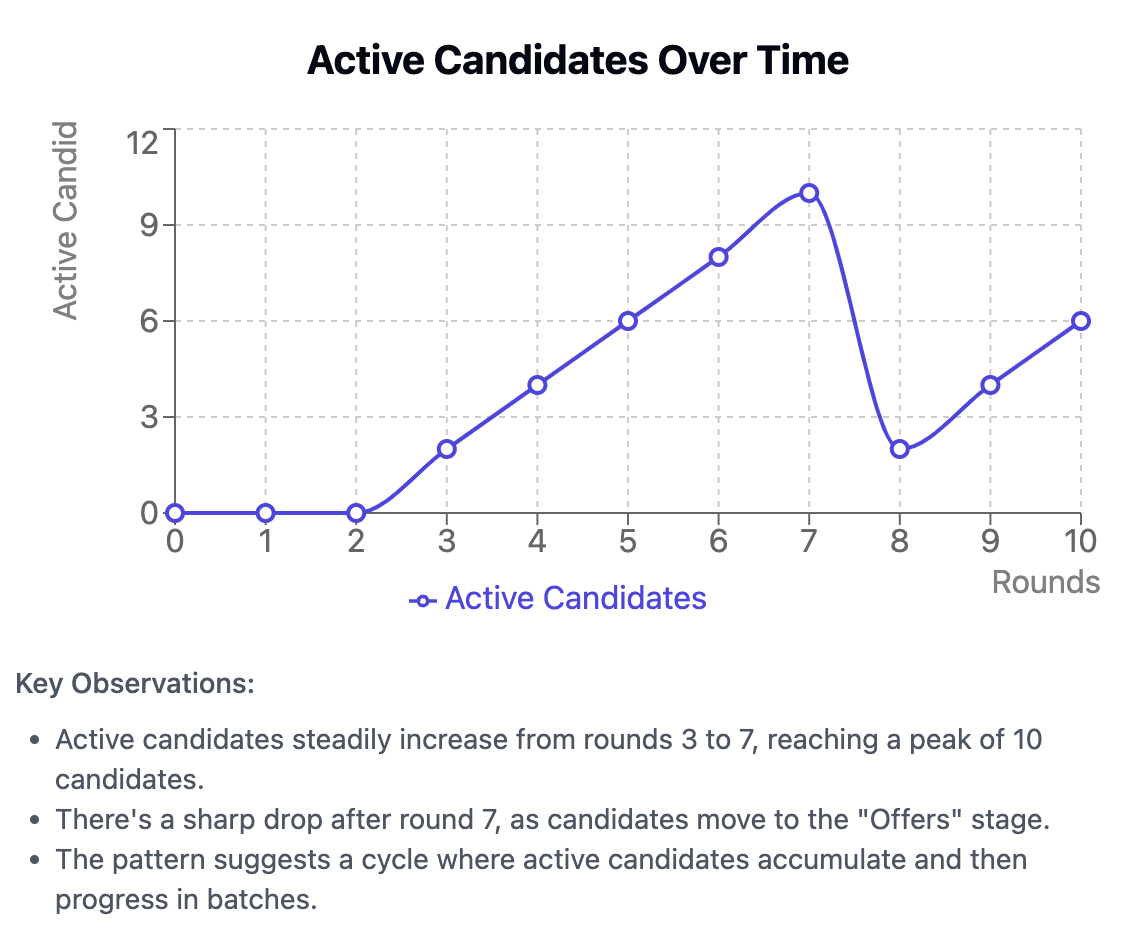

Using a Model Context Protocol server

Now that we’ve completed the experiment of relying on the LLM to guide us through every step of the model, run, and render pipeline, we can admit something important: some of this wasn’t that useful. A better approach would allow us to run every step from within the chat interface.

Fortunately, we can provide that experience by creating a Model Context Protocol (MCP) server and exposing it to the LLM. MCP servers provide tools to the LLM, and also instructions on when and how to use those tools. A simple MCP server might offer a tool that retrieves the current weather, performs an API call against a service, or queries a search index for results.

In this case, an MCP server can also use the systems library to run a systems model, as shown in the lethain/systems-mcp library.

That repository includes instructions for installing it and configuring it to run with the Claude Desktop setup that we configured in the Foundations chapter. Once you have it installed, you can run and render a model as described in the following steps.

This MCP server exposes two tools, load_systems_documentation and run_systems_model. The first of those tools, load_systems_documentation, injects project-specific documentation and examples into the context window to serve as in-context learning, improving the quality of generated models. The other tool, run_systems_model, takes a model specification and runs it, returning the results in JSON format.
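Under the hood, an MCP client invokes a tool by sending a JSON-RPC 2.0 request with the `tools/call` method. Here is a minimal sketch of what a call to run_systems_model might look like on the wire. The argument names `spec` and `rounds` are assumptions for illustration, as is the bracketed infinite-stock syntax in the example specification; check the lethain/systems-mcp repository for the tool’s actual input schema.

```python
import json


def tool_call_request(request_id, tool_name, arguments):
    """Build an MCP tools/call request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical arguments: the real tool may name or structure these differently.
request = tool_call_request(
    1,
    "run_systems_model",
    {
        "spec": "[PotentialCandidates] > Outreaches @ 10\n"
                "Outreaches > Interested @ Conversion(0.5)",
        "rounds": 50,
    },
)
print(json.dumps(request, indent=2))
```

You never write this message yourself. Claude Desktop constructs it when the LLM decides to use the tool, which is what keeps the whole workflow inside the chat interface.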

Here is an example of Claude.ai using both tools to generate a systems model for a social network. Note that none of the examples relate to social networks, so this is a genuinely new creation rather than a recreation of an explicit item within the loaded context.

Claude.ai using two tools to generate a systems model

After creating the output from a systems model, we still need to explore that data. In a Jupyter Notebook, we would render different cuts in still charts. In Claude.ai, we can ask it to generate an Artifact to explore this dataset via:

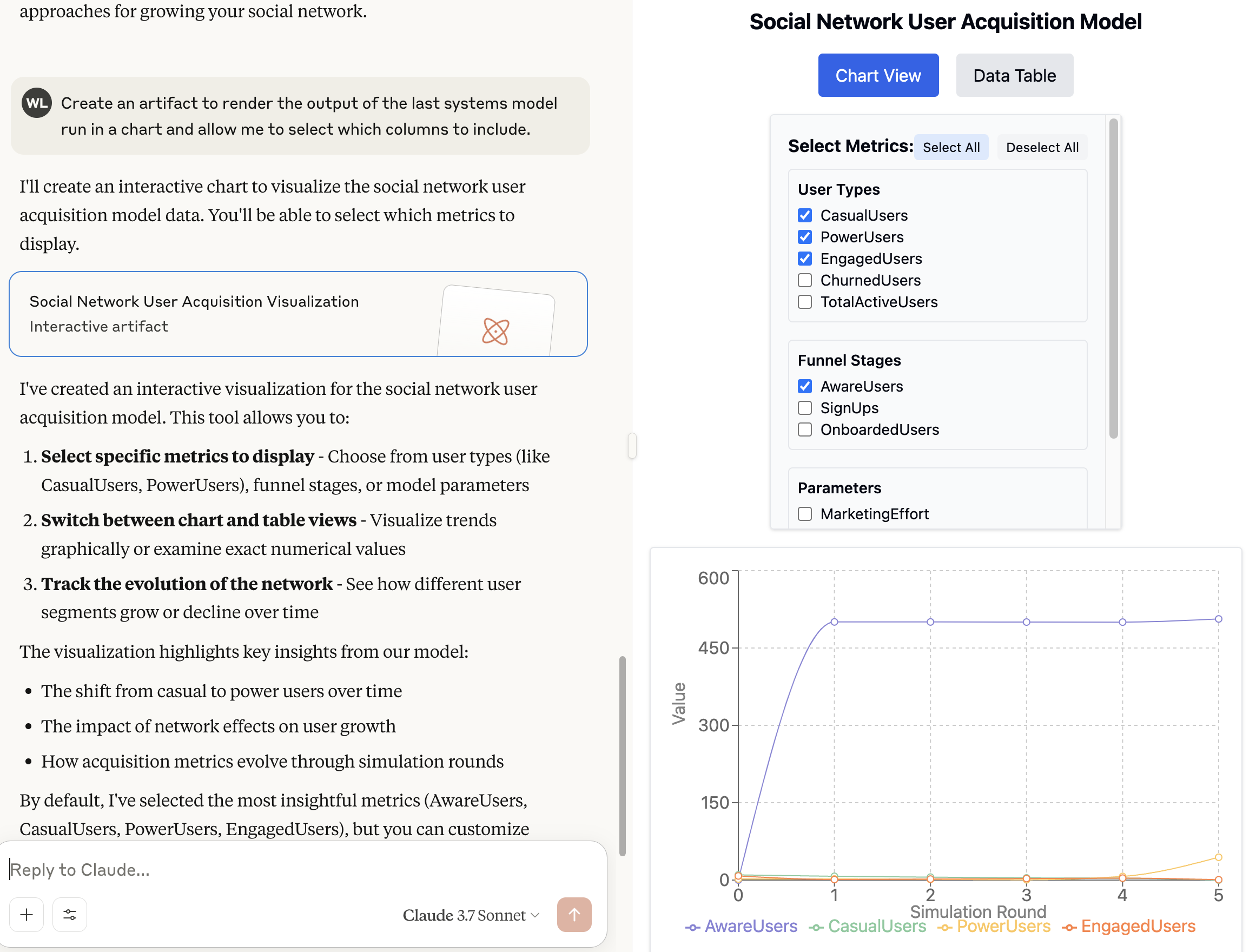

Create an artifact to render the output of the last systems model run in a chart and allow me to select which columns to include.

This will generate an artifact along these lines, allowing you to select which columns to render.

Claude.ai Artifact for exploring system model results

At this point, we’ve created something that is more powerful than a Jupyter Notebook, because it will generate the systems model for you, and that also allows the same sort of exploratory scenarios. With a more critical eye, we can observe that the Artifact only included five rounds rather than all fifty. We can fix it by asking:

Include all 50 rounds in the artifact, not just 5

However, we really shouldn’t need to ask. So it’s not quite perfect, but you can make it work fairly well. It’s also easy to imagine a near future where tools and Artifacts are better connected, allowing the LLM to directly connect tools to Artifacts rather than requiring the LLM to indirectly connect them by replicating data.
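The truncation problem is easy to state in code. This is a minimal sketch of the column selection an Artifact performs, plus a guard that fails loudly when rounds are missing. It assumes the JSON results are a list of per-round objects keyed by stock name, which is an assumption about the output shape; `select_series` is a hypothetical helper, and the payload is generated placeholder data rather than a real model run.

```python
import json


def select_series(results_json, columns, expected_rounds):
    """Extract the chosen columns, refusing to proceed if rounds are missing."""
    rounds = json.loads(results_json)
    if len(rounds) != expected_rounds:
        raise ValueError(f"expected {expected_rounds} rounds, got {len(rounds)}")
    return {column: [entry[column] for entry in rounds] for column in columns}


# Placeholder data generated here for illustration, not real model output.
payload = json.dumps([{"Active": i, "Hires": i // 10} for i in range(50)])

series = select_series(payload, ["Active"], expected_rounds=50)
print(len(series["Active"]))

# A copy truncated to five rounds is rejected instead of silently charted.
truncated = json.dumps(json.loads(payload)[:5])
try:
    select_series(truncated, ["Active"], expected_rounds=50)
except ValueError as error:
    print(error)
```

An Artifact would do the equivalent in its own generated code. The point is that when the LLM copies data by hand, nothing enforces this check unless you ask for it.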

Summary

In this chapter, we started with a complex problem, and broke it down into components that an LLM can solve. We used documentation to prime the LLM to write correct syntax. We loaded a Model Context Protocol server to push math-heavy work into software that knows how to quickly and accurately perform the math. We used a Claude Artifact to not just chart the resulting data, but to explore that rendered data.

This combination of approaches not only solved the particular problem of generating and running systems models, it’s also a generalizable approach to making messy problems fit with LLM-driven approaches. What worked here will work elsewhere, including–we hope–for helping us generate Wardley maps in the next chapter.

Next chapter: AI Companion / Generating Wardley Maps

Previous chapter: AI Companion / Reviewing and Editing

First published at lethain.com/ces-ai-generate-systems-model-llm/