AI Companion / Reviewing and Editing

In the last chapter, we co-wrote a strategy with an LLM. Now we’re going to review that strategy, looking for areas we can improve. Ideally, every organization would have someone ready to provide quick feedback on your documents, but that’s often not the case, either because those people are busy or because they simply don’t exist. These techniques are a useful stand-in in those cases.

In this chapter we’ll cover:

- Identifying weaknesses in a strategy document using an LLM

- Using an LLM to summarize and narrow feedback to provide to the strategy’s author

- Directing an LLM to rewrite an existing strategy, based on your evaluation of which flagged concerns to address and how

By the chapter’s end, you’ll have identified issues in the last chapter’s strategy, communicated those issues concisely, and rewritten the strategy to address that feedback.

Skimming for flaws

Taking the text of the strategy, we’re going to use a reasoning model, such as OpenAI’s o3 or Anthropic’s Claude Opus 4 with extended thinking, to look for gaps. We’ll use this prompt:

Look for reasoning errors and unsubstantiated claims in this strategy:

{text of strategy document}
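If you’d rather run this review from a script than paste text into a chat window, the prompt can be treated as a small template. The following is a minimal sketch rather than the approach used in this chapter: the function name `build_review_prompt` is hypothetical, and sending the result to a model is left to whichever client you already use.

```python
# Template mirroring the prompt above; "{strategy}" is the placeholder
# for the full text of the strategy document.
REVIEW_PROMPT = (
    "Look for reasoning errors and unsubstantiated claims in this strategy:\n\n"
    "{strategy}"
)


def build_review_prompt(strategy_text: str) -> str:
    """Return the review prompt with the strategy text filled in.

    Hypothetical helper for illustration; it only builds a string and
    does not call any model.
    """
    strategy_text = strategy_text.strip()
    if not strategy_text:
        raise ValueError("strategy_text is empty; pass the full document text")
    return REVIEW_PROMPT.format(strategy=strategy_text)
```

Keeping the prompt in one place makes it easy to rerun the same review against later drafts and compare what gets flagged.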

Running in the project we set up in Foundations of collaboration, this prompt identified a number of reasoning errors, unsubstantiated claims, and missing elements. Let’s review each category of identified issues.

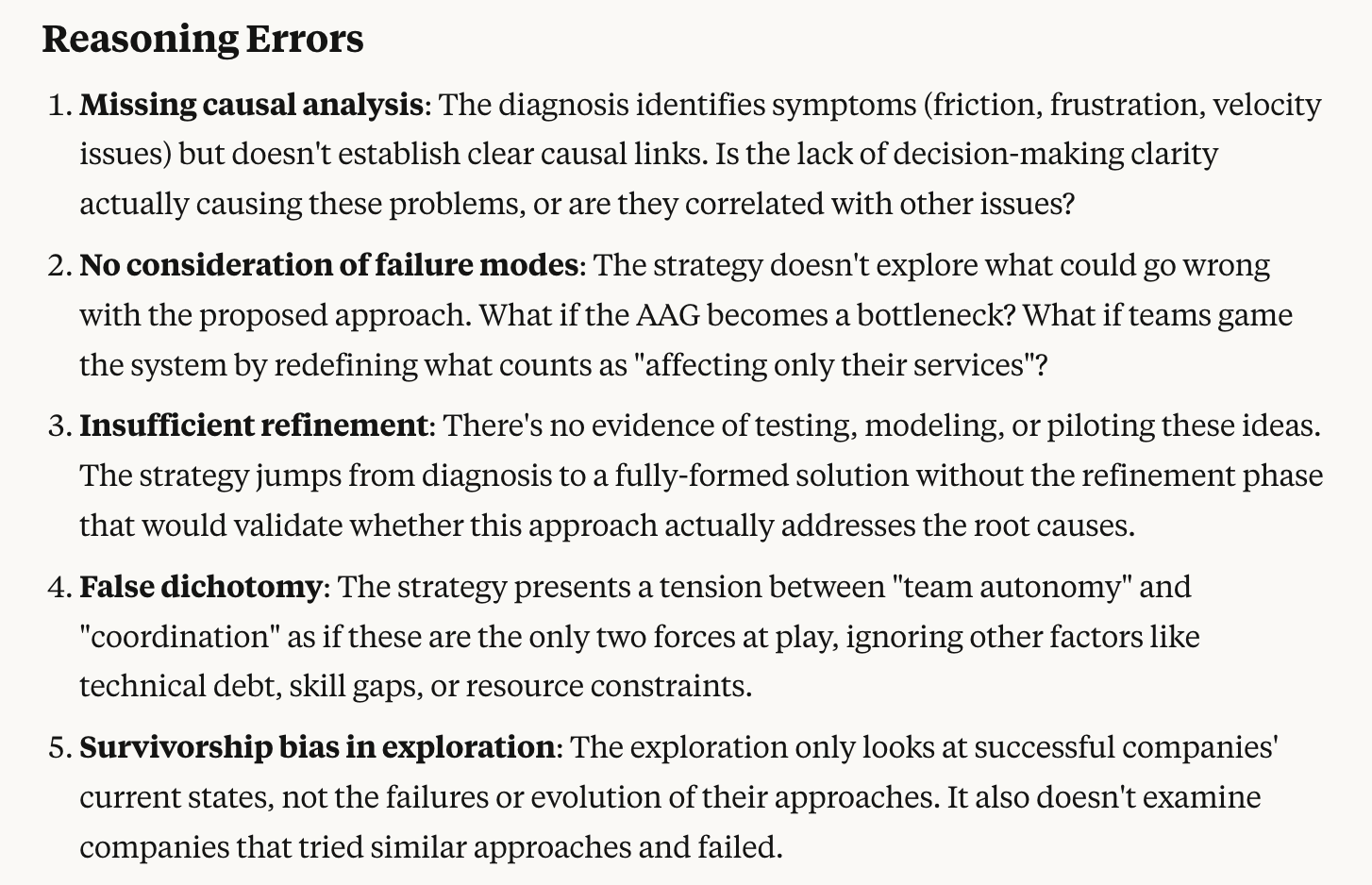

First, it found a number of reasoning errors. These are errors in the thinking underpinning the strategy, ranging from a lack of causal analysis (for example, do the diagnoses actually tie to the policies?) to survivorship bias in the exploration (for example, what about considering examples where the policies went poorly, in addition to those where they were successful?).

Reasoning errors identified by Claude Opus 4

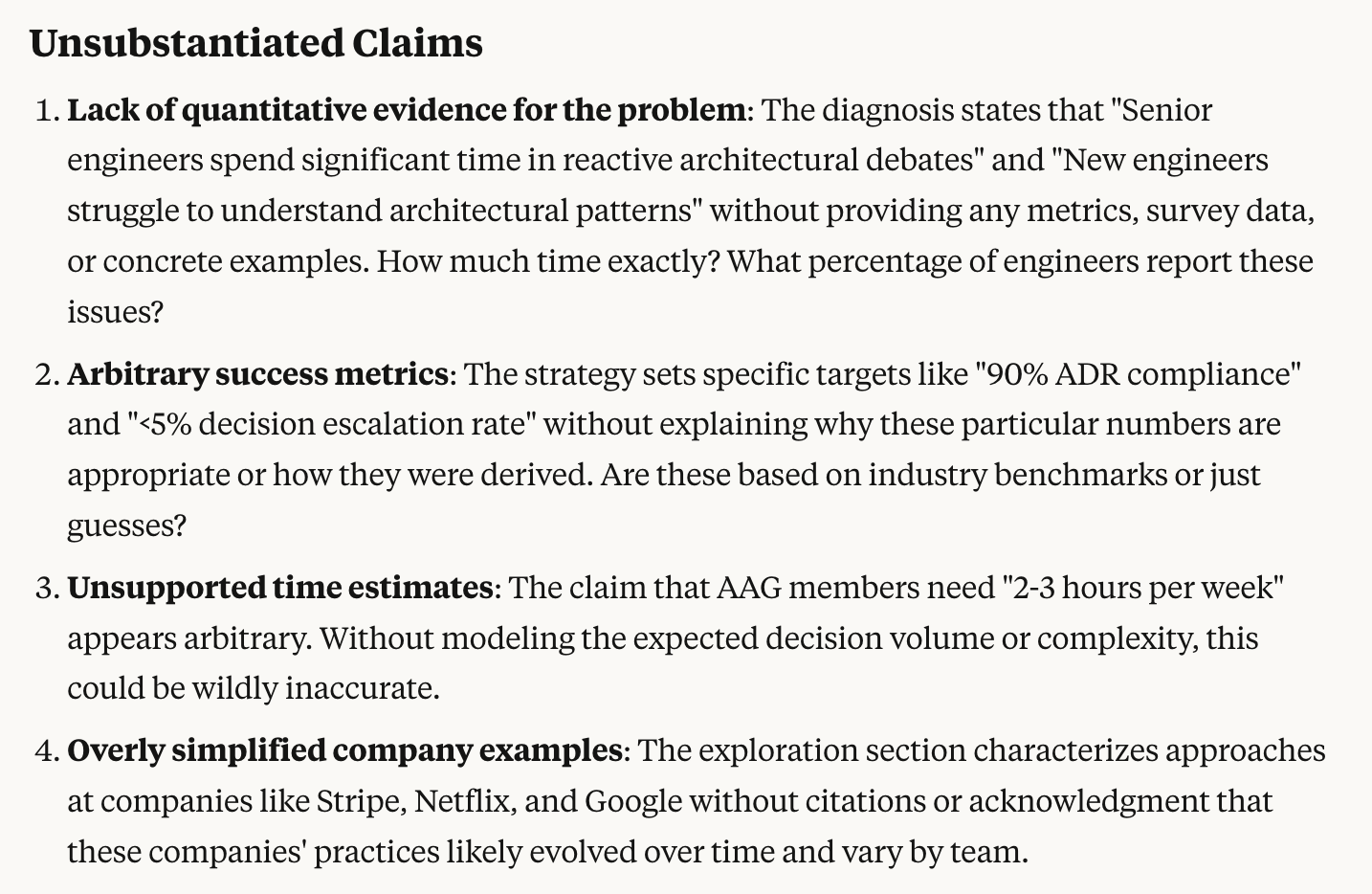

Next, it identified a number of unsubstantiated claims. These are a mix of issues in the diagnosis, such as the lack of evidence that senior engineers are spending significant time in architectural debates, and arbitrary goals in suggested policies. Both categories are valid issues that ought to be addressed in an effective strategy document.

Unsubstantiated claims flagged by Claude Opus 4

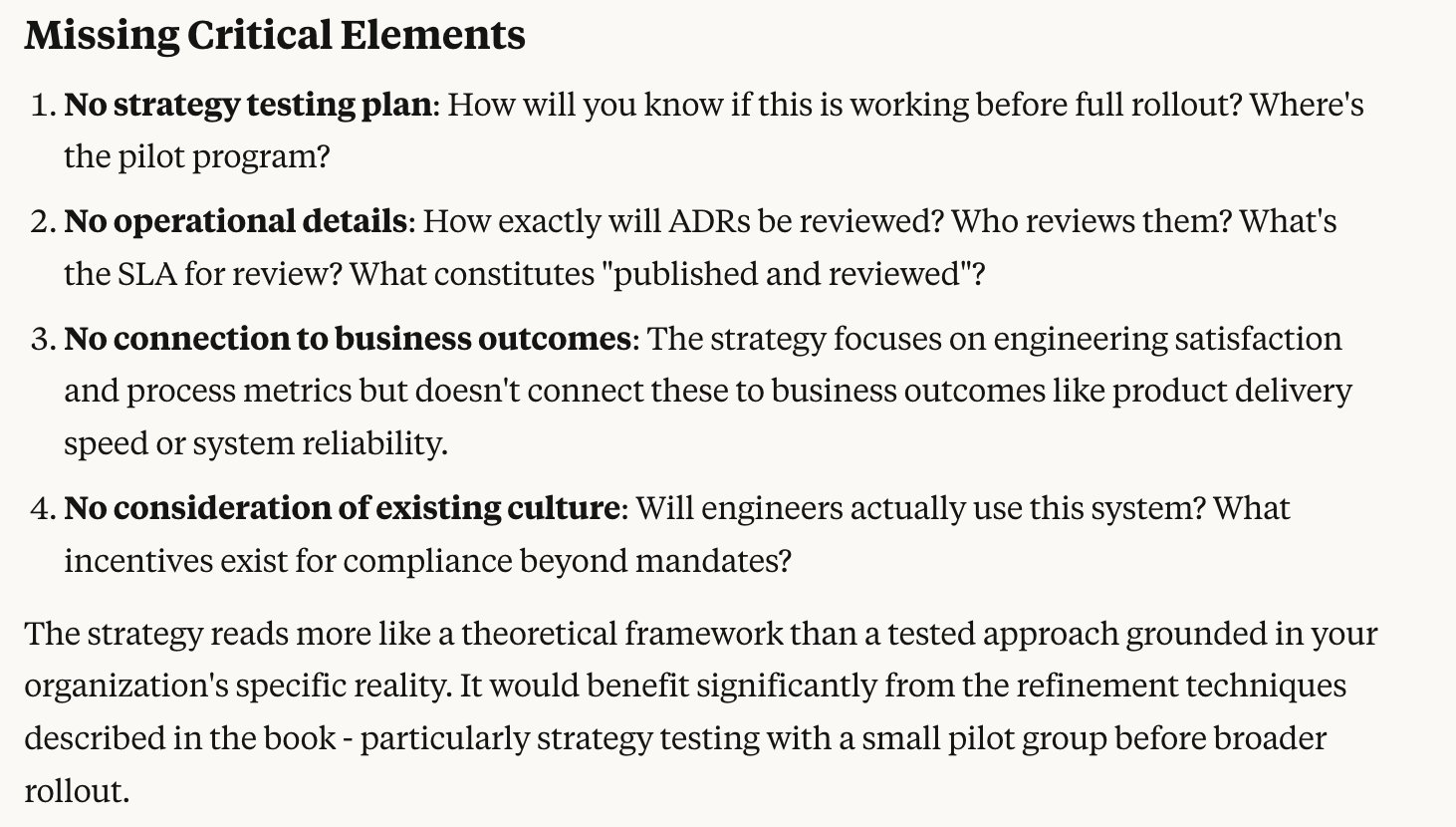

Most valuably, it also identified a series of entirely missing elements. In particular, it identified that there was no strategy testing plan, which I agree is probably the most important gap in the entire plan.

Missing elements detected by Claude Opus 4

The feedback here is genuinely very useful, and identifies a number of places where we definitely should improve the initial writeup. The biggest weakness in this feedback is that there’s simply too much of it. Fortunately, we can whittle down the volume to what’s most useful to the author.

Summarizing feedback

Imagine for a moment that this is a strategy someone else wrote, and that we want to provide feedback on it. We wouldn’t want to provide all of this feedback. Instead, we’d want to identify the top 3-5 issues and suggest how they might be addressed.

Reading through the above feedback, the three biggest issues from my perspective are:

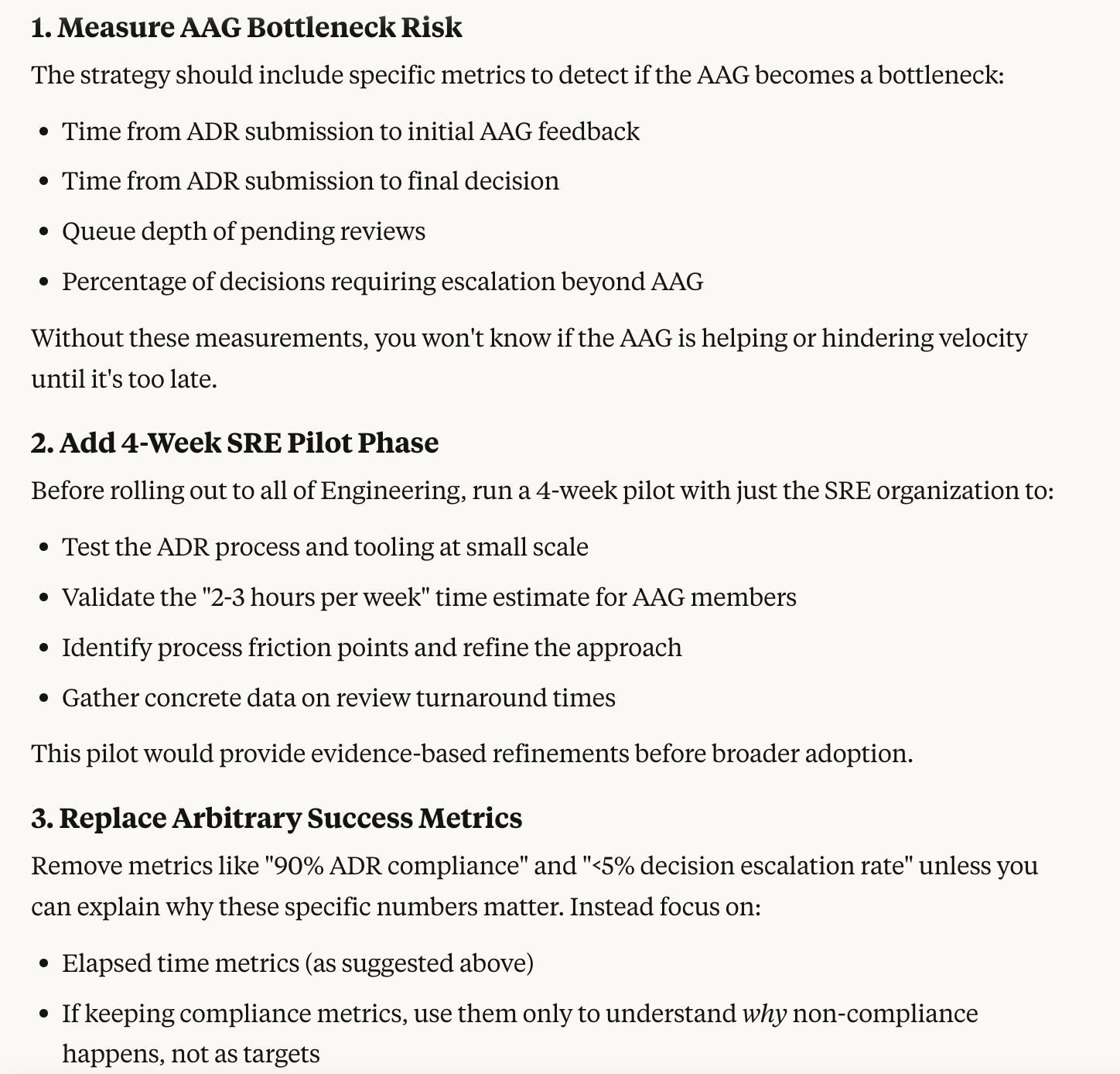

- Identify what we can measure to determine if the AAG becomes a bottleneck (e.g. time from requested review to review being performed)

- Add a 4 week pilot phase for the SRE organization, which we’ll use to evaluate and refine expanding this to all of Engineering

- Eliminate all arbitrary success metrics other than those related to elapsed time (e.g. 90% ADR compliance is not a great measure unless we’re using that exclusively to understand why the 10% aren’t happening)
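To make the first item concrete, here is a rough sketch of how "time from requested review to review being performed" could be computed once you have timestamps for each request. Everything here is an assumption for illustration: the `summarize_waits` name, the tuple layout, and the choice of the median as the summary statistic are not from the strategy itself.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional, Tuple

# Hypothetical record: (when review was requested, when it was performed).
# The second value is None while the request is still waiting.
ReviewRequest = Tuple[datetime, Optional[datetime]]


def summarize_waits(
    requests: List[ReviewRequest],
) -> Tuple[Optional[timedelta], int]:
    """Return (median wait for completed reviews, count of pending requests)."""
    waits_in_seconds = [
        (reviewed - requested).total_seconds()
        for requested, reviewed in requests
        if reviewed is not None
    ]
    pending = sum(1 for _, reviewed in requests if reviewed is None)
    if not waits_in_seconds:
        return None, pending
    return timedelta(seconds=median(waits_in_seconds)), pending
```

The median is used here because a single very slow review would otherwise dominate an average, and the pending count is reported separately because unanswered requests are themselves a sign of a bottleneck.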

We can then ask the LLM to summarize our feedback concisely, along with our suggestions on how the authors might address the feedback. Continuing the conversation that generated the initial feedback from the LLM, try a prompt like this:

Rewrite the above feedback to focus on these three areas of feedback,
eliminate most other feedback unless it seems essential, and make it
as concise as possible:

1. Identify what we can measure to determine if the AAG becomes
   a bottleneck (e.g. time from requested review to review
   being performed)
2. Add a 4 week pilot phase for the SRE organization, which
   we’ll use to evaluate and refine expanding this to all
   of Engineering
3. Eliminate all arbitrary success metrics other than those
   related to elapsed time (e.g. 90% ADR compliance is not
   a great measure unless we’re using that exclusively to
   understand why the 10% aren’t happening)
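The important detail in this step is that the focusing prompt is sent as a follow-up in the same conversation, so "the above feedback" refers to something the model can see. A minimal sketch of that idea follows, assuming the role/content message shape common to chat-style APIs; the helper names are hypothetical, and no particular vendor client is implied.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def build_focus_prompt(focus_areas: List[str]) -> str:
    """Build the follow-up prompt from a list of focus areas."""
    if not focus_areas:
        raise ValueError("provide at least one focus area")
    numbered = "\n".join(
        f"{number}. {area}" for number, area in enumerate(focus_areas, start=1)
    )
    return (
        f"Rewrite the above feedback to focus on these {len(focus_areas)} "
        "areas of feedback, eliminate most other feedback unless it seems "
        "essential, and make it as concise as possible:\n\n" + numbered
    )


def continue_conversation(
    history: List[Message], focus_areas: List[str]
) -> List[Message]:
    """Return a new history with the focusing prompt added as a user turn.

    The original history (initial prompt plus the model's feedback) is
    left unmodified.
    """
    follow_up = {"role": "user", "content": build_focus_prompt(focus_areas)}
    return history + [follow_up]
```

Starting a fresh conversation instead would mean pasting the full original feedback back in, since the model would otherwise have nothing for "the above feedback" to refer to.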

The LLM then generates this focused version of the prior feedback, available in Gist. This is much more along the lines of what I would provide to a strategy’s author than the voluminous original feedback.

Revised strategy feedback from LLM

You might want to rework the tone a bit with another pass, but generally I think the particulars make sense. Using the LLM to brainstorm ideas broadly, and then narrowing down to the particulars that matter, is an effective overall approach.

Incorporating feedback

For this section, we’re changing perspectives once again, returning to the perspective of the strategy’s author, who has just received the above feedback.

Going to our Crafting Engineering Strategy project, we’ll add both the original strategy document and the feedback to the chat, and start with this prompt explaining how to specifically address the feedback:

I want to incorporate the below feedback into our strategy document
on engineering decision making. Follow this advice on how to
incorporate the feedback:

1. "Measure AAG Bottleneck Risk" -- prioritize addressing this,
   we should measure using time feedback is requested in a Slack
   channel until feedback is provided by the AAG as measured by
   replying with a new document in the Slack thread
2. "Add 4-Week SRE Pilot Phase" -- yes, we should do this,
   add this as note below the policy/operations section rather
   than making a big deal about it
3. "Replace Arbitrary Success Metrics" -- remove any instances
   of arbitrary success metrics, don't try to suggest better ones

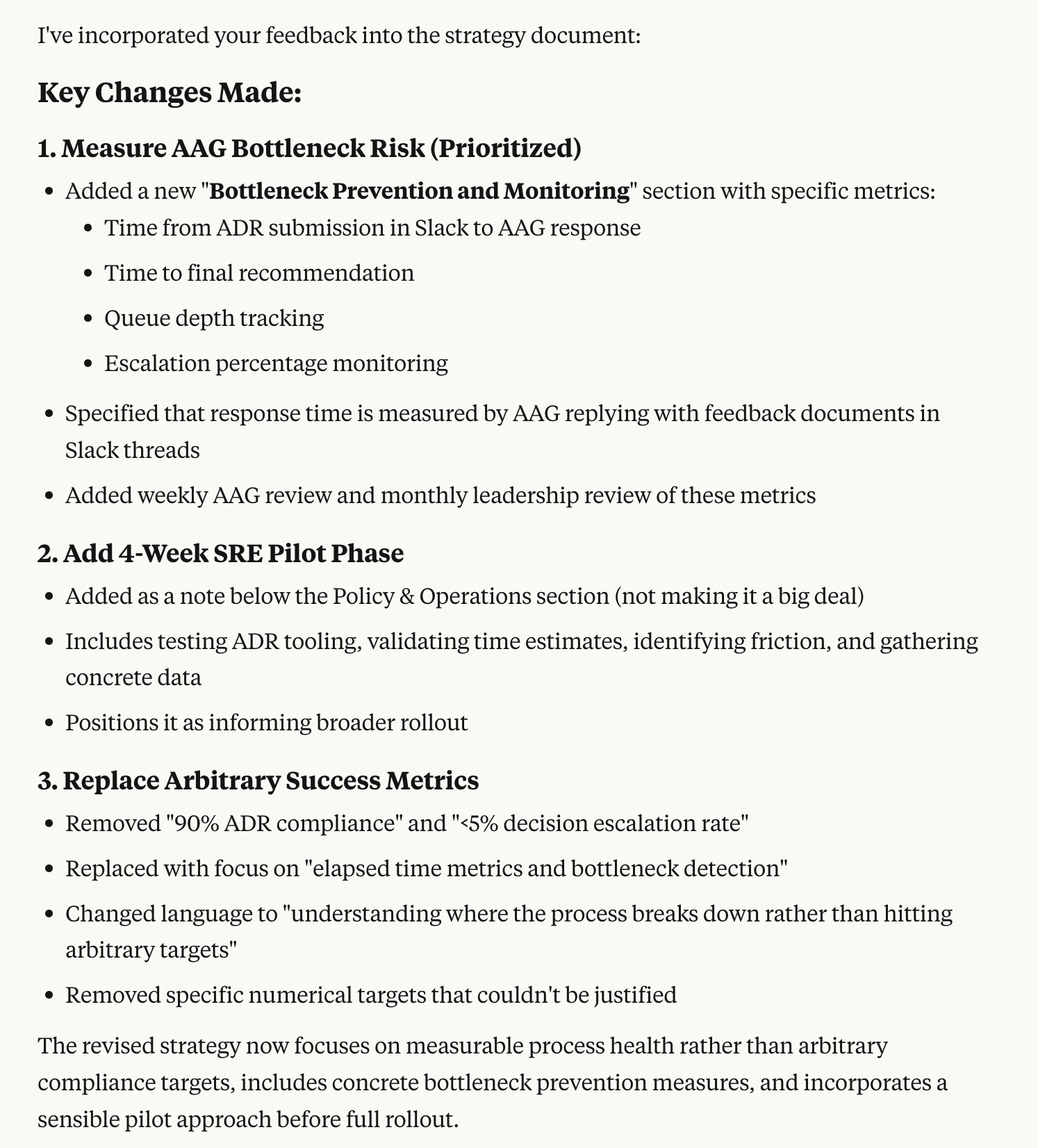

This generated an explanation of how the feedback was addressed, and also the full revised strategy document.

Summary of how the feedback was addressed

This worked well, and is a good reminder to apply the LLM-as-Intern technique: frontier LLMs are fairly good at applying your explicitly stated direction, but they’re still missing your good judgment. Be clear, and you’ll get the best possible output.

Summary

In this chapter, we started with a strategy draft, generated feedback on it, refined that feedback, and then decided how to incorporate it into a revised draft of the strategy. While I wouldn’t describe the strategy as perfect, it is significantly improved from the initial version, was quickly done, and we weren’t constrained by anyone else getting around to providing feedback.

If you work in an organization with significant senior engineering bandwidth, then I’m certain you could already generate a document of this caliber quickly, but not this fast. More importantly, I am certain your organization could write a better strategy than this one, but many organizations simply don’t have the bandwidth or the staff to do that. Maybe you’re the only Staff-plus engineer at your company, or maybe you’re trying to iterate on a draft before the other knowledgeable engineer gets off a busy project. In those cases, I find these techniques surprisingly useful.

First published at lethain.com/ces-ai-review-strategy-with-an-llm/